Sandboxing Untrusted Code

October 19, 2019 | 8 min read

I run GolangCI, a SaaS service for static code analysis in the cloud. The service lets users run custom commands before analysis (as TravisCI does).

I needed to find a way to run untrusted code on our servers.

Business Requirements

- User-defined commands should run inside a Docker container for users’ convenience: they can easily test these commands locally in the same environment.

- Builds (sequences of user-provided commands) should be isolated from each other: an attacker must not be able to access data from another build. This is critical because our clients trust us with their private repositories for analysis.

I think this is a typical task for many CI-like SaaS services, so this article may be helpful to others.

Docker Containers as a Sandbox

Docker containers are not isolated from each other the way VMs are. In most cases, if you harden them, containers are safe. But the attack surface is huge: the Linux kernel and Docker Engine.

This means you are at the mercy of Linux privilege escalation exploits. We only need to go back to October 2016 to see the Dirty COW exploit in the wild, with simple PoCs that, executed in a container, will get you root on the outer box: scumjr/dirtycow-vdso.

These bugs are rare, taken with extreme seriousness, and tend to be patched by the time they are announced. But highly sophisticated attackers will know about such exploits long before they’re announced.

Some SaaS services use Docker as a sandbox for untrusted code. But Docker containers need to be carefully hardened before they can serve as a sandbox:

- downgrade to a non-privileged user

- configure seccomp and AppArmor

- harden the Docker host machine: keep the OS and Docker up to date.

See the official Docker documentation on security.

Even if you do all of this perfectly, supporting such a system carries risk: you must apply every security fix quickly. I don’t think that’s possible in a small company with no 24/7 operations team, security alerts, or audits.

Therefore I decided not to rely on Docker containers alone as a sandbox in GolangCI.

VM as a Sandbox

The one feature a VM usually has is hardware isolation at the chip level through actual instructions: Intel VT-x or AMD-V. We can treat VMs (virtualization) as a fully isolated environment for untrusted code.

Create VMs manually

We can start QEMU or VirtualBox VMs and run builds inside them. This is secure, but it’s difficult to manage and scale:

- It would be much easier to develop against an API for creating VMs than to run shell commands for it.

- We need additional code to guarantee that VMs are stopped after a job finishes: the stop operation itself can hit a network timeout, so we need a TTL or a monitoring job.

- You will likely run these VMs inside other VMs (AWS, Google Cloud), and nested virtualization performs really badly.

Create VMs in Cloud

Since almost every business uses AWS, Google Cloud, or another cloud provider, we can use their APIs to spawn virtual machines, e.g. EC2 instances. This gives maximal isolation, but there are three issues:

- We still need to guarantee that we don’t leave too many instances running because a stop request timed out: in the cloud, that can lead to big bills.

- EC2 instance startup time is ~1 minute. Also, `docker pull` takes some time.

- We can spawn and delete instances (VMs) through the API, but we still need to execute commands over SSH to perform `docker pull` and `docker run`.

Provision VMs in Kubernetes

GolangCI is deployed in a Kubernetes cluster on GKE, and many startups use Kubernetes nowadays.

It can be convenient to manage VMs through Kubernetes. Projects like kubevirt or virtlet do that.

I didn’t choose this way but it looks promising.

MicroVM as a Sandbox

MicroVMs are lightweight virtual machines. They typically start in under 200 ms.

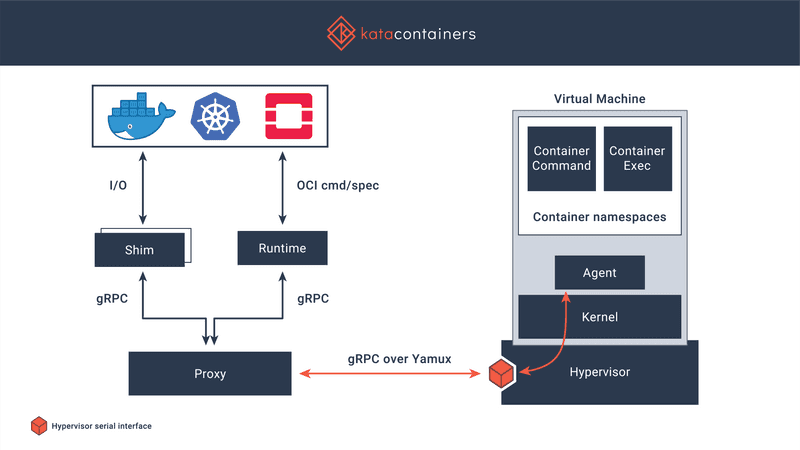

Kata Containers (Clear Containers)

Kata Containers is an OCI-compliant container runtime that executes containers within QEMU-based virtual machines. Conveniently, we can use it with regular Docker containers. It’s compatible with Kubernetes.

I decided to test it on Google Cloud VMs. I ran a build and static analysis of a sample Go project in normal Docker containers and in Kata containers. It was 10x slower with the Kata runtime. I guess this was caused by nested virtualization: GCE machines are VMs, so Kata is a VM inside a VM. If we could run Docker on bare metal instances, it could be much more efficient.

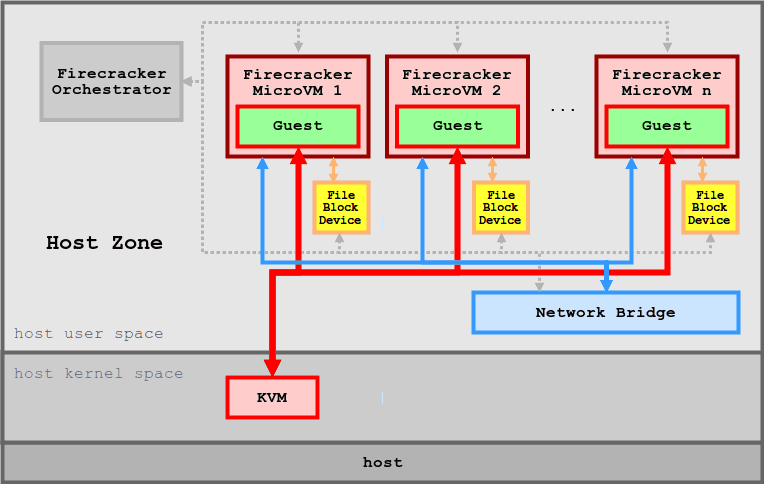

AWS Firecracker

Firecracker is a cloud-native alternative to QEMU that is purpose-built for running containers safely and efficiently, and nothing more. Firecracker provides a minimal required device model to the guest operating system while excluding non-essential functionality. This, along with a streamlined kernel loading process enables a < 125 ms startup time and a reduced memory footprint.

Firecracker doesn’t use QEMU: instead, its authors implemented their own lightweight VMM in Rust. Firecracker should therefore be seen as an alternative to QEMU, not to Kata Containers. Moreover, Kata Containers supports Firecracker.

There is also a containerd runtime for Firecracker.

I didn’t test it directly because I expected it to be slow due to nested KVM virtualization, and bare metal servers are too expensive for testing. Instead I decided to test it through AWS Fargate (AWS Fargate and Lambda use Firecracker): see the results below.

Nabla

Nabla Containers are similar to Kata Containers and Firecracker. There is also an OCI runtime for them.

I didn’t test it for the same reason: nested virtualization slow-down.

Other Approaches

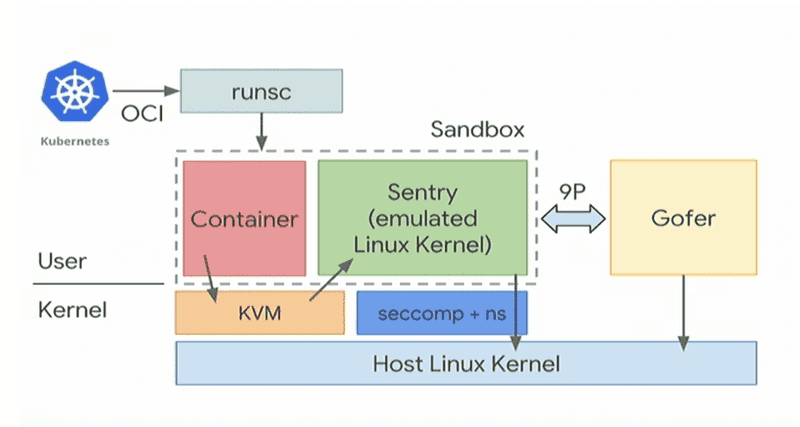

gVisor

gVisor is a user-space kernel, written in Go, that implements a substantial portion of the Linux system surface. It includes an Open Container Initiative (OCI) runtime called runsc that provides an isolation boundary between the application and the host kernel. The runsc runtime integrates with Docker and Kubernetes, making it simple to run sandboxed containers.

gVisor doesn’t use virtualization, therefore:

- there are no nested virtualization issues

- container startup is very fast: there is no VM to boot

It looks promising, and Google App Engine uses it for sandboxing, so it appears to be a stable and secure product. I tested building a sample Go project with it on a GCE VM. It was 4-10x slower than raw containers:

    $ sudo docker run --runtime=gvisor -w /goapp -v ~/go.mod:/goapp/go.mod -it -e GO111MODULE=on golang bash -c "time go mod download"
    real 0m9.637s
    user 0m4.190s
    sys 0m2.470s

    $ sudo docker run -w /goapp -v ~/go.mod:/goapp/go.mod -it -e GO111MODULE=on golang bash -c "time go mod download"
    real 0m2.579s
    user 0m0.564s
    sys 0m0.204s

There is a quantitative comparison between Kata Containers and gVisor. Its results are similar: on CPU-heavy workloads the speed is similar, but gVisor can’t even finish some tests on IO-heavy workloads.

I’m waiting for the new VFS implementation: it could speed things up dramatically.
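The `time` output from the two `go mod download` runs earlier in this section can be turned into slowdown ratios. Here is a small Go sketch, assuming a hypothetical `Slowdown` helper; it relies on `time.ParseDuration` accepting the `0m9.637s` format that bash prints.

```go
package main

import (
	"fmt"
	"time"
)

// Slowdown reports how many times slower the sandboxed run was.
// Both arguments use the format printed by bash's `time`, e.g. "0m9.637s".
func Slowdown(sandboxed, baseline string) (float64, error) {
	s, err := time.ParseDuration(sandboxed)
	if err != nil {
		return 0, err
	}
	b, err := time.ParseDuration(baseline)
	if err != nil {
		return 0, err
	}
	if b <= 0 {
		return 0, fmt.Errorf("baseline must be positive, got %v", b)
	}
	return s.Seconds() / b.Seconds(), nil
}

func main() {
	// Timings copied from the two runs above: gVisor vs. the default runtime.
	rows := []struct{ name, gvisor, plain string }{
		{"real", "0m9.637s", "0m2.579s"},
		{"user", "0m4.190s", "0m0.564s"},
		{"sys", "0m2.470s", "0m0.204s"},
	}
	for _, r := range rows {
		ratio, err := Slowdown(r.gvisor, r.plain)
		if err != nil {
			fmt.Println("parse error:", err)
			continue
		}
		fmt.Printf("%s: %.1fx\n", r.name, ratio)
	}
}
```

For this single run the wall-clock ratio comes out at roughly 3.7x, while the user and sys ratios are roughly 7.4x and 12.1x. One measurement is noisy, so treat these as rough figures rather than a benchmark.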

Novm

Novm is an experimental KVM-based VMM for containers, written in Go. It’s similar to gVisor. The latest commit was in 2015, so I decided not to test an unmaintained project.

Heroku

Heroku has a concept of lightweight containers called “dynos”. We can run them dynamically, but the pricing was too high for us.

Google Cloud Run

Google Cloud Run uses gVisor for sandboxing. I had already tested gVisor, so there was no need to test it again.

AWS Lambda

AWS Lambda looked like a good choice. It uses Firecracker for isolation. But its 3 GB memory limit is too low for GolangCI’s task.

AWS Fargate

AWS Fargate runs containers in an isolated environment. It uses Firecracker too, but it has a higher memory limit. I tested its performance: Go build tasks are just 2x slower than in raw containers. That was acceptable for us and much better than the up-to-10x slowdown with gVisor.

Also, Fargate doesn’t cache Docker images. Unless you use a fixed pool of EC2 instances, every container creation has to pull the Docker image. For our 320 MB Docker image the pull takes 40-70 s.

Conclusion

- I decided to use AWS Fargate for GolangCI.com sandboxing. It has been running in production for months (thousands of builds), and I’ve had no problems with it apart from the lack of Docker image caching.

- I’m waiting for gVisor to become as fast as AWS Firecracker on bare metal (AWS Lambda and AWS Fargate). I would prefer gVisor because it would save about 1 minute of Docker image pulling per build, and I would like to integrate it with Kubernetes.