How I've Set Up HA PostgreSQL on Kubernetes

March 13, 2019 | 10 min read

Why Do I Need PostgreSQL on Kubernetes

I’ve decided to migrate GolangCI from Heroku to Kubernetes. Before that GolangCI used Heroku PostgreSQL as the primary database. The popular way to migrate is to use managed cloud PostgreSQL service like AWS Aurora or Google Cloud SQL. For example, Algolia migrated from Heroku to GKE and used Google Cloud SQL. But I needed a fully controlled solution because GolangCI has an on-premise version that should work on a local Kubernetes cluster without access to AWS or Google Cloud.

With the Helm I Will Do it in 5 minutes

I was new to Kubernetes so first I have tried to install PostgreSQL by helm install stable/postgresql. This Helm chart uses a bitnami image. Bitnami has an article about using this image for making production-ready PostgreSQL on Kubernetes.

Soon I realized that this installation has not production-ready config and we need to install it like this:

helm install --name pg --namespace postgres -f ./values-production.yaml stable/postgresql

kubectl -n postgres scale statefulset my-postgresql-slave --replicas=3Where is the Automatic Master Failover

HA PostgreSQL should automatically survive and recover if a node with a database master (primary) crashes. With the pure Kubernetes solution from the previous step it works in the following way:

- a PostgreSQL master pod or node crashes

- Kubernetes runs a healthcheck every 10 seconds by default. We can tune

periodSecondsand run a healthcheck every second (it’s the minimum interval). In one second after the crash Kubernetes detects it. - Kubernetes schedules a new pod for the PostgreSQL master server. If a node crashed it may need to run a new node first. If just a pod failed Kubernetes can just restart a pod.

- PostgreSQL master starts

This pure Kubernetes HA solution has an advantage of the simplicity of setup. But it has the following drawbacks:

- we have downtime between steps 1 and 4 and the downtime can be minutes if Kubernetes needs to restart a node

- no stable and trusted cross-zone availability

- PostgreSQL master run in a StatefulSet with persistent volumes.

- By default persistent volumes exist only in one zone. If the full zone outage occurs we can’t automatically failover the master: Kubernetes pod with the master can’t be scheduled in another availability zone.

- AWS EBS doesn’t support cross-zone replication of disks. Google Cloud does support regional persistent disks but it’s the beta feature.

- I don’t trust Cross-Zone High Availability based on a closed-source disk replication. Better if HA based on open-source consensus algorithms. In this paper authors don’t call the “modern HA” this solution because it’s based on opaque disk replication and not consensus.

There is an interesting comparison of pure Kubernetes and Stolon HA. Stolon is the HA wrapper for PostgreSQL that can work on Kubernetes. We will look at it later.

Choosing Cross DC Consensus Based HA PostgreSQL

I decided that I need cross-datacenter (cross-zone) HA PostgreSQL solution based on consensus. There are two primary choices:

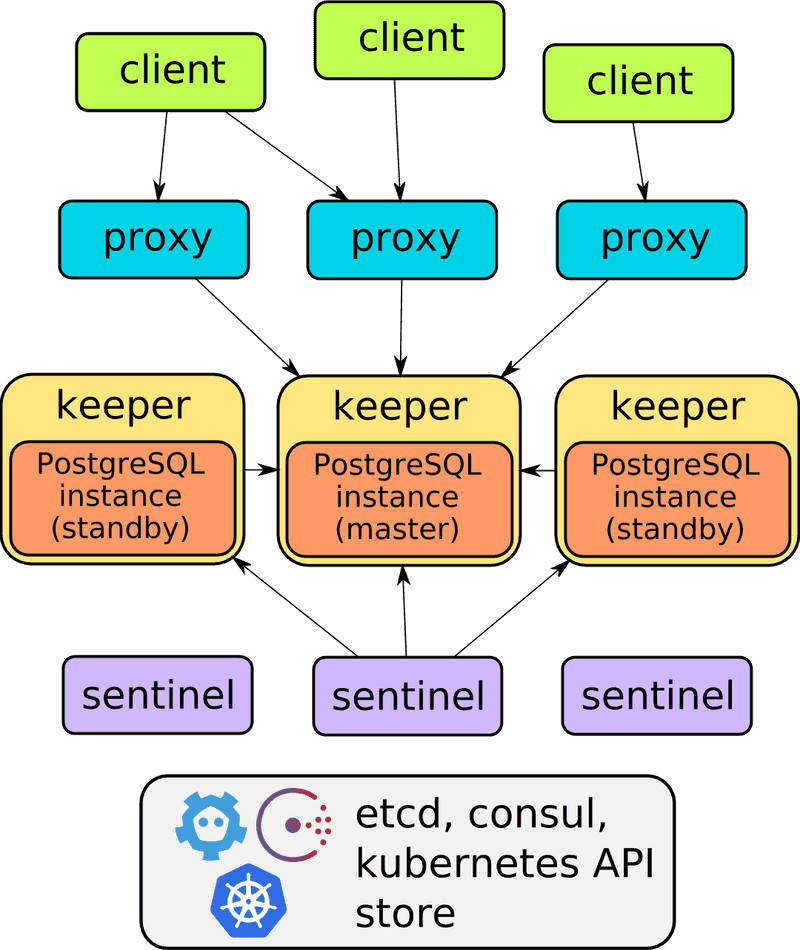

Stolon has the following architecture:

Both projects are similar by supported features. The small comparison:

- Patroni is supported by Postgres Operator

- Both of projects support sync replication: patroni and stolon.

- Both of them support quorum-based sync replication (but not natively by PostgreSQL): patroni and stolon

I’ve chosen (like lwolfs) the Stolon because it has more clear for me architecture.

Running Stolon PostgreSQL on Kubernetes

Set up

The steps I performed to set up Stolon PostgreSQL in k8s with Helm:

- Make a copy of values.yml (I’ve used it from this commit).

- Create secrets for PostgreSQL superuser and replication in a namespace

postgres:

Superuser:

kubectl create secret generic pg-su \

--namespace postgres \

--from-literal=username='su_username' --from-literal=password='su_password'and set them:

superuserSecret:

name: pg-su

usernameKey: username

passwordKey: passwordReplication:

kubectl create secret generic pg-repl \

--namespace postgres \

--from-literal=username='repl_username' --from-literal=password='repl_password'and set them:

replicationSecret:

name: pg-repl

usernameKey: username

passwordKey: password- Remove not needed parts from

values.yaml:

superuserUsername: "stolon"

## password for the superuser (REQUIRED if superuserSecret is not set)

superuserPassword:

replicationUsername: "repluser"

## password for the replication user (REQUIRED if replicationSecret is not set)

replicationPassword:- Configure replication. Replace

clusterSpec: {}

# sleepInterval: 1s

# maxStandbys: 5with the

clusterSpec:

synchronousReplication: true

minSynchronousStandbys: 1 # quorum-like replication

maxSynchronousStandbys: 1 # quorum-like replication

initMode: new- Set pod disruption budget

podDisruptionBudget:

minAvailable: 2- Configure

pgParametersand set replica count to 3 (total 3 instances: 1 master, 1 sync standby and 1 async standby):

pgParameters:

max_connections: 100

# ...

keeper:

# ...

replicaCount: 3

# ...

proxy:

# ...

replicaCount: 3

# ...

sentinel:

# ...

replicaCount: 3- Set up Prometheus service discovery annotations:

annotations:

prometheus.io/scrape: "true"

prometheus.io/port: "8080"- Install the helm chart

$ helm install --name pg --namespace postgres -f local-values.yml ~/charts/stable/stolon/

NAME: pg

LAST DEPLOYED: Thu Feb 28 13:16:20 2019

NAMESPACE: postgres

STATUS: DEPLOYED

RESOURCES:

==> v1/ServiceAccount

NAME SECRETS AGE

pg-stolon 1 5s

==> v1beta1/Role

NAME AGE

pg-stolon 5s

==> v1beta1/RoleBinding

NAME AGE

pg-stolon 5s

==> v1/Service

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

pg-stolon-keeper-headless None <none> 5432/TCP 5s

pg-stolon-proxy 10.39.250.171 <none> 5432/TCP 5s

==> v1beta2/Deployment

NAME KIND

pg-stolon-proxy Deployment.v1beta2.apps

pg-stolon-sentinel Deployment.v1beta2.apps

==> v1beta2/StatefulSet

pg-stolon-keeper StatefulSet.v1beta2.apps

==> v1beta1/PodDisruptionBudget

NAME MIN-AVAILABLE MAX-UNAVAILABLE ALLOWED-DISRUPTIONS AGE

pg-stolon-keeper 2 N/A 0 5s

pg-stolon-proxy 2 N/A 0 5s

pg-stolon-sentinel 2 N/A 0 5s

==> v1/ConfigMap

NAME DATA AGE

pg-stolon 0 5s

NOTES:

Stolon cluster installed and initialized.

To get superuser password run

PGPASSWORD=$(kubectl get secret --namespace postgres pg-su -o jsonpath="{.data.password}" | base64 --decode; echo)- Check the installed cluster:

$ kubectl -n postgres get all

NAME READY STATUS RESTARTS AGE

pod/pg-stolon-create-cluster-mhmxm 0/1 Completed 0 4m

pod/pg-stolon-keeper-0 1/1 Running 0 4m

pod/pg-stolon-keeper-1 1/1 Running 0 4m

pod/pg-stolon-proxy-6f648b49d4-cnnpz 1/1 Running 0 4m

pod/pg-stolon-proxy-6f648b49d4-rsv8j 1/1 Running 0 4m

pod/pg-stolon-sentinel-694cf8f76f-8fmr9 1/1 Running 0 4m

pod/pg-stolon-sentinel-694cf8f76f-d5f6r 1/1 Running 0 4m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.39.240.1 <none> 443/TCP 3d

service/pg-stolon-keeper-headless ClusterIP None <none> 5432/TCP 4m

service/pg-stolon-proxy ClusterIP 10.39.250.171 <none> 5432/TCP 4m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/pg-stolon-proxy 2 2 2 2 4m

deployment.apps/pg-stolon-sentinel 2 2 2 2 4m

NAME DESIRED CURRENT READY AGE

replicaset.apps/pg-stolon-proxy-6f648b49d4 2 2 2 4m

replicaset.apps/pg-stolon-sentinel-694cf8f76f 2 2 2 4m

NAME DESIRED CURRENT AGE

statefulset.apps/pg-stolon-keeper 2 2 4m

NAME DESIRED SUCCESSFUL AGE

job.batch/pg-stolon-create-cluster 1 1 4mand show distribution amongst nodes:

$ kubectl -n postgres get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

pg-stolon-create-cluster-mhmxm 0/1 Completed 0 6m 10.36.2.28 gke-primary-services-default-pool-8dd9a533-3tkl

pg-stolon-keeper-0 1/1 Running 0 6m 10.36.2.29 gke-primary-services-default-pool-8dd9a533-3tkl

pg-stolon-keeper-1 1/1 Running 0 6m 10.36.2.30 gke-primary-services-default-pool-8dd9a533-3tkl

pg-stolon-proxy-6f648b49d4-cnnpz 1/1 Running 0 6m 10.36.2.27 gke-primary-services-default-pool-8dd9a533-3tkl

pg-stolon-proxy-6f648b49d4-rsv8j 1/1 Running 0 6m 10.36.0.30 gke-primary-services-default-pool-8dd9a533-s2vg

pg-stolon-sentinel-694cf8f76f-8fmr9 1/1 Running 0 6m 10.36.0.29 gke-primary-services-default-pool-8dd9a533-s2vg

pg-stolon-sentinel-694cf8f76f-d5f6r 1/1 Running 0 6m 10.36.1.18 gke-primary-services-default-pool-8dd9a533-h4zsErrors I've caught during at first attempts

First, I got: 2019-03-01 06:19:51.982 GMT [108194] LOG: unrecognized configuration parameter "maxConnections" in file "/stolon-data/postgres/stolon-temp-postgresql.conf" line 6

<br/>

2019-03-01 06:19:51.982 GMT [108194] FATAL: configuration file "/stolon-data/postgres/stolon-temp-postgresql.conf" contains errors

<br/>

2019-03-01T06:19:52.172Z ERROR cmd/keeper.go:1083 failed to start instance {"error": "postgres exited unexpectedly"}

<br/>

2019-03-01T06:19:53.897Z ERROR cmd/keeper.go:641 cannot get configured pg parameters {"error": "dial unix /tmp/.s.PGSQL.5432: To fix it I’ve changed

pgParameters:

maxConnections: 100to

pgParameters:

max_connections: "100"After that I got an error because of unknown sha256sum in a template:

annotations:

checksum/config: {{ include (print .Template.BasePath "/hooks/update-cluster-spec-job.yaml") . | sha256sum }}To fix it I’ve updated Helm to the latest version.

Test the Cluster

Check Simple Commands

Find pg cluster ip:

$ kubectl -n postgres get services | fgrep proxy

pg-stolon-proxy ClusterIP 10.39.252.208 <none> 5432/TCP 18mfind password from the secret:

echo $(kubectl get secret --namespace postgres pg-su -o jsonpath="{.data.password}" | base64 --decode)connect to PostgreSQL through the proxy and create the table:

$ kubectl -n postgres exec -it pg-stolon-keeper-0 -- psql --host 10.39.252.208 --port 5432 --username superuser_name -W

test=# CREATE DATABASE test;

$ kubectl -n postgres exec -it pg-stolon-keeper-0 -- psql --host 10.39.252.208 --port 5432 --username superuser_name -W -d test

test=# create table test (id int primary key not null, value text not null);

CREATE TABLE

test=# insert into test values (1, 'value1');

INSERT 0 1

test=# select * from test;

id | value

----+--------

1 | value1

(1 row)Check a Master Death

Find out the master pod: pg-stolon-keeper-0 is the master because pg_is_in_recovery is false for it.

$ kubectl -n postgres exec -it pg-stolon-keeper-0 -- psql --host 127.0.0.1 --port 5432 --username superuser_name -W -d test

test=# select pg_is_in_recovery();

pg_is_in_recovery

-------------------

f

(1 row)Check the master death: connect to the current master in keeper-0 through the proxy:

$ kubectl -n postgres exec -it pg-stolon-keeper-0 -- psql --host 10.39.252.208 --port 5432 --username superuser_name -W -d test

test=# select * from test;

id | value

----+--------

1 | value1

(1 row)Don’t close this SQL shell and in another window run:

$ kubectl -n postgres delete pod pg-stolon-keeper-0

pod "pg-stolon-keeper-0" deletedLet’s check logs of sentinels:

2019-03-01T08:25:38.090Z WARN cmd/sentinel.go:264 no keeper info available {"db": "66ced60c", "keeper": "keeper0"}

2019-03-01T08:25:43.140Z WARN cmd/sentinel.go:264 no keeper info available {"db": "66ced60c", "keeper": "keeper0"}

2019-03-01T08:25:48.201Z WARN cmd/sentinel.go:264 no keeper info available {"db": "66ced60c", "keeper": "keeper0"}

2019-03-01T08:25:53.412Z WARN cmd/sentinel.go:264 no keeper info available {"db": "66ced60c", "keeper": "keeper0"}

2019-03-01T08:25:58.462Z INFO cmd/sentinel.go:976 master db is failed {"db": "66ced60c", "keeper": "keeper0"}

2019-03-01T08:25:58.462Z INFO cmd/sentinel.go:987 trying to find a new master to replace failed master

2019-03-01T08:25:58.463Z INFO cmd/sentinel.go:1013 electing db as the new master {"db": "6784fde9", "keeper": "keeper1"}

2019-03-01T08:26:08.564Z INFO cmd/sentinel.go:1132 removing old master db {"db": "66ced60c", "keeper": "keeper0"}

2019-03-01T08:26:08.564Z INFO cmd/sentinel.go:1218 one of the new synchronousStandbys has been removed {"db": "66ced60c", "inSyncStandbys": [], "synchronousStandbys": ["66ced60c"]}

2019-03-01T08:26:08.565Z INFO cmd/sentinel.go:1230 setting the expected sync-standbys to the current known in sync sync-standbys {"inSyncStandbys": [], "synchronousStandbys": ["66ced60c"]}we see that keeper-1 became a new master, check it:

$ kubectl -n postgres exec -it pg-stolon-keeper-1 -- psql --host 127.0.0.1 --port 5432 --username superuser_name -W -d test -c 'select pg_is_in_recovery()'

pg_is_in_recovery

-------------------

f

(1 row)kubernetes restarts a pod:

$ kubectl -n postgres get pods | fgrep keeper

pg-stolon-keeper-0 1/1 Running 0 1m

pg-stolon-keeper-1 1/1 Running 0 49m

pg-stolon-keeper-2 1/1 Running 0 49mbefore restart we had:

- keeper-0 - master

- keeper-1 - sync replica

- keeper-2 - async replica

check it after restart:

$ kubectl -n postgres exec -it pg-stolon-keeper-1 cat /stolon-data/postgres/postgresql.conf | fgrep sync

synchronous_standby_names = 'stolon_46cb4a21'

$ kubectl -n postgres exec -it pg-stolon-keeper-0 cat /stolon-data/postgres/recovery.conf | fgrep slot_name

primary_slot_name = 'stolon_3a667e52'

$ kubectl -n postgres exec -it pg-stolon-keeper-2 cat /stolon-data/postgres/recovery.conf | fgrep slot_name

primary_slot_name = 'stolon_46cb4a21'and after the restart we have:

- keeper-0 - async replica

- keeper-1 - master

- keeper-2 - sync replica

In PostgreSQL shell to the proxy run select again:

test=# select * from test;

FATAL: terminating connection due to administrator command

server closed the connection unexpectedly

This probably means the server terminated abnormally

before or while processing the request.

The connection to the server was lost. Attempting reset: Succeeded.

test=# select * from test;

id | value

----+--------

1 | value1

(1 row)and we see that proxy closed connection to the old master as we expected.

Notes

I should note two things:

- when killing master Stolon re-elected master faster than pod was restarted.

- the downside is that proxy goes only to a master, we need to set up

haproxyto go to slaves too.

Conclusion

This Stolon setup is running in the production of GolangCI now. We haven’t encountered any problems with it yet.